Let's say you've decided to modernize your DevOps practice and are interested in AWS, but aren't quite sold on how easy it's going to be to automate your deployment pipeline there.

Well, I'm here to tell you it's easier than you think.

But wait, there's more! It'll also make your day-to-day development cadence much more agile and better able to respond to the patterns that drive your business, instead of leaving you beholden to your multi-step development process.

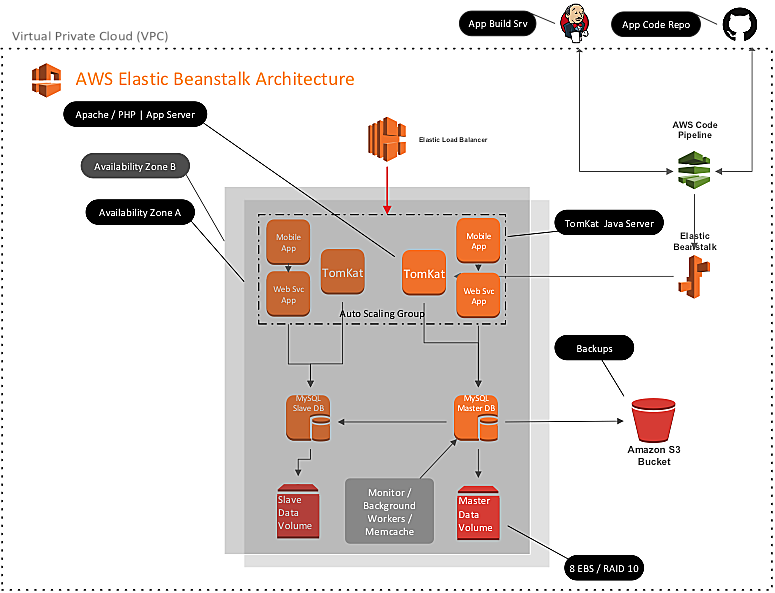

How, you ask? The following diagram presents an idea of what we'll be covering today:

Most application deployment pipelines start with a server that will compile their application code and then use a build server like Jenkins to package that compiled code into a deployable artifact.
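To make the "package into a deployable artifact" step concrete, here is a minimal Python sketch of what that packaging amounts to. In practice Jenkins and Maven do this for you; the `target` directory, the `app.war` file, and the `package_artifact` helper are illustrative assumptions, not part of any Jenkins or AWS API.

```python
import tempfile
import zipfile
from pathlib import Path


def package_artifact(build_dir: Path, artifact_path: Path) -> list:
    """Zip every file under build_dir into artifact_path and return the archived names."""
    names = []
    with zipfile.ZipFile(artifact_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(build_dir.rglob("*")):
            if path.is_file():
                # Store paths relative to the build directory so the zip has no absolute paths.
                arcname = path.relative_to(build_dir).as_posix()
                archive.write(path, arcname)
                names.append(arcname)
    return names


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        build_dir = Path(tmp) / "target"  # hypothetical build output directory
        build_dir.mkdir()
        (build_dir / "app.war").write_bytes(b"placeholder build output")
        print(package_artifact(build_dir, Path(tmp) / "artifact.zip"))
```

The resulting zip is the kind of single file a later stage can pick up and deploy.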

This process is exactly the same within the Amazon public cloud. Your Jenkins build server is best hosted in that same cloud so it's easier to integrate with the AWS API, but beyond that, it's the exact same pattern and process.

Where the paths diverge is in leveraging AWS CodePipeline to automate the process through the AWS cloud, instead of depending on a collection of plugins and Jenkins configuration to enable the automation.

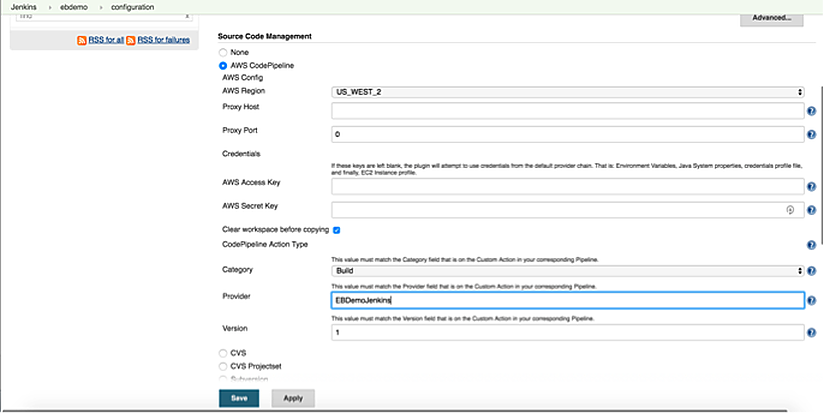

The first step is to configure the AWS CodePipeline plugin in Jenkins to handle zipping the code from your favorite repo (GitHub, in our example) and then passing it to Jenkins for its build process (Maven, in our example).

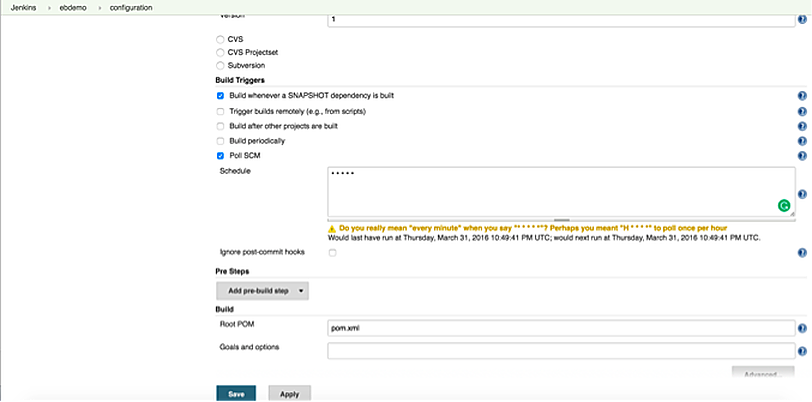

The next step is to configure the triggers section so the pipeline starts whenever a change is made to the master branch of your code repository.
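Jenkins handles this through its own trigger settings, so you won't write this yourself, but the underlying rule is simple. The sketch below only illustrates the branch filter, assuming an event shaped like a GitHub push payload with a `ref` field; the `should_trigger` helper is hypothetical.

```python
def should_trigger(push_event: dict, branch: str = "master") -> bool:
    """Return True when a push event targets the watched branch."""
    # GitHub push payloads carry the full ref, e.g. "refs/heads/master".
    return push_event.get("ref") == f"refs/heads/{branch}"


if __name__ == "__main__":
    print(should_trigger({"ref": "refs/heads/master"}))
    print(should_trigger({"ref": "refs/heads/feature/login"}))
```

Pushes to any other branch are ignored, which keeps experimental work from kicking off a deployment.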

The last step of the Jenkins CodePipeline configuration is the post-build action, where you specify the AWS service endpoint you'll be publishing to (in our case, AWS Elastic Beanstalk).

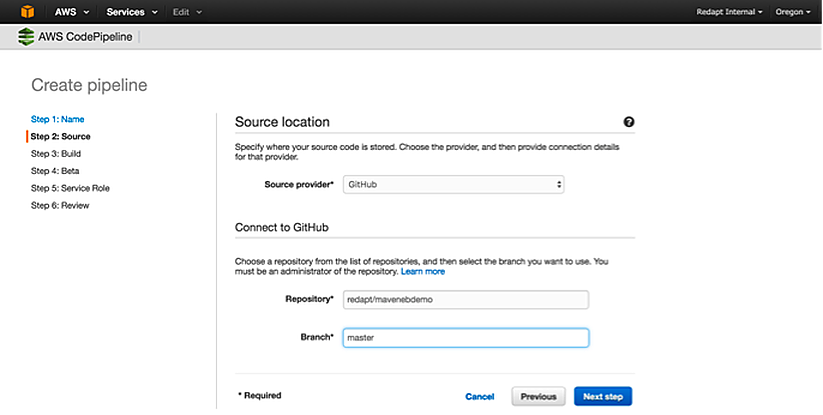

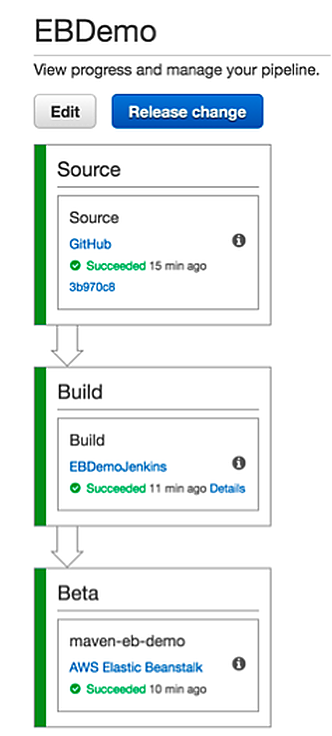

Now, the interesting stuff. Start creating your deployment pipeline inside the AWS CodePipeline service.

Log into the AWS console, choose the CodePipeline service, and then create a new pipeline. You'll want to point the source code configuration at the branch you're going to use with Amazon.

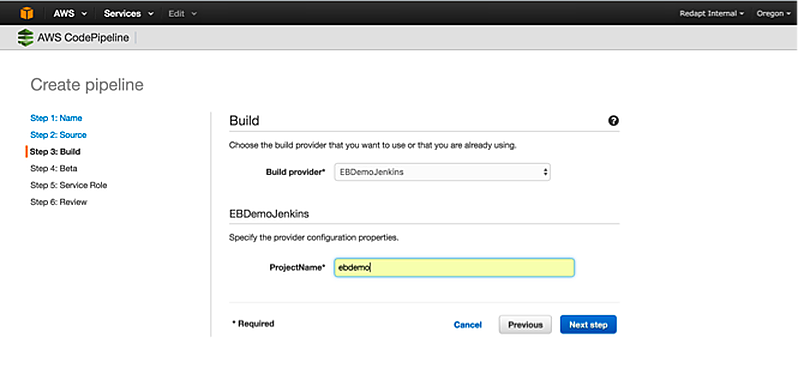
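If you'd rather see what the console is filling in for you, the source step corresponds roughly to a stage definition like the one below. This is a sketch that assumes the version 1 GitHub source action; the organization, repository, and token values are placeholders, and `source_stage` is a hypothetical helper.

```python
import json


def source_stage(owner: str, repo: str, branch: str, token: str) -> dict:
    """Build a CodePipeline source stage that pulls from a GitHub branch."""
    return {
        "name": "Source",
        "actions": [
            {
                "name": "Source",
                "actionTypeId": {
                    "category": "Source",
                    "owner": "ThirdParty",
                    "provider": "GitHub",
                    "version": "1",
                },
                "configuration": {
                    "Owner": owner,
                    "Repo": repo,
                    "Branch": branch,
                    "OAuthToken": token,
                },
                # Later stages consume the zipped source under this artifact name.
                "outputArtifacts": [{"name": "SourceOutput"}],
                "runOrder": 1,
            }
        ],
    }


if __name__ == "__main__":
    # All values here are placeholders for illustration only.
    stage = source_stage("example-org", "example-app", "master", "<token>")
    print(json.dumps(stage, indent=2))
```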

Next, point the build process at that Jenkins server you just configured.

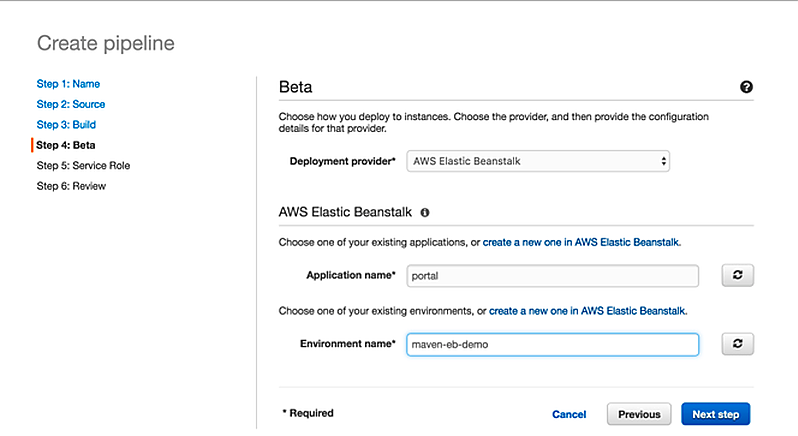
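Behind that screen, the Jenkins build step is a custom action whose provider name has to match the one entered in the Jenkins plugin configuration. Here is a rough sketch of the stage definition; the provider and project names are placeholders, the artifact names are my own choice, and `jenkins_build_stage` is a hypothetical helper.

```python
import json


def jenkins_build_stage(provider_name: str, project_name: str) -> dict:
    """Build a CodePipeline build stage that hands the source to a Jenkins project."""
    return {
        "name": "Build",
        "actions": [
            {
                "name": "JenkinsBuild",
                "actionTypeId": {
                    "category": "Build",
                    "owner": "Custom",
                    # Must match the provider name set in the Jenkins plugin.
                    "provider": provider_name,
                    "version": "1",
                },
                "configuration": {"ProjectName": project_name},
                "inputArtifacts": [{"name": "SourceOutput"}],
                "outputArtifacts": [{"name": "BuildOutput"}],
                "runOrder": 1,
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder names; use whatever you configured in Jenkins.
    print(json.dumps(jenkins_build_stage("ExampleJenkins", "example-project"), indent=2))
```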

Then configure the endpoint where you'd like your application to deploy. AWS offers a lot of options, but in our example, we're using a simple Elastic Beanstalk application.

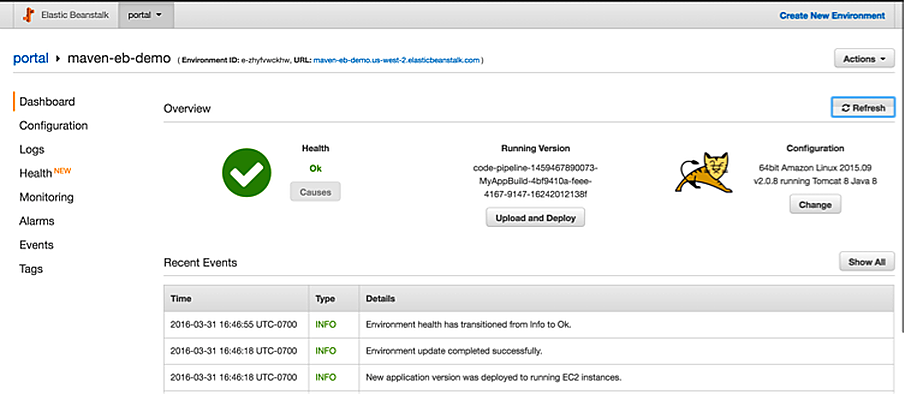
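For reference, the deploy step maps to a stage definition along these lines. Treat it as a sketch: the application and environment names are placeholders for ones you've already created in Elastic Beanstalk, and `beanstalk_deploy_stage` is a hypothetical helper.

```python
import json


def beanstalk_deploy_stage(application: str, environment: str) -> dict:
    """Build a CodePipeline deploy stage that targets an Elastic Beanstalk environment."""
    return {
        "name": "Deploy",
        "actions": [
            {
                "name": "DeployToBeanstalk",
                "actionTypeId": {
                    "category": "Deploy",
                    "owner": "AWS",
                    "provider": "ElasticBeanstalk",
                    "version": "1",
                },
                "configuration": {
                    "ApplicationName": application,
                    "EnvironmentName": environment,
                },
                # Deploys whatever the build stage published under this artifact name.
                "inputArtifacts": [{"name": "BuildOutput"}],
                "runOrder": 1,
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder names for illustration only.
    print(json.dumps(beanstalk_deploy_stage("example-app", "example-env"), indent=2))
```

If you script pipeline creation, stage dictionaries like this one go in the `stages` list of the pipeline definition, alongside a role ARN and an S3 artifact store.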

And voila! It's as easy as that. Of course, I've excluded a good number of the technical steps in each part to get it working, but it's not anything a DevOps admin with an available afternoon and some patience couldn't stand up. If you'd like a more detailed walkthrough, Amazon took the time to provide a good one with Elastic Beanstalk here.

If you're looking to get your feet wet with Amazon and automating your deployment pipeline, just go ahead and get 'em wet.

The harder parts come in architecting your application for high availability in the cloud world, or architecting your platforms to scale in a way that doesn't cause those focused on the bottom line to shut down your project prematurely. Those kinds of decisions and evaluations are why you bring in someone technically awesome like Redapt to reduce risk and establish a best-practice solution.

Automating how you build and release new code makes it much easier for your developers to take innovative risks without the pain of scrambling to roll back when something fails. With a little forethought and an afternoon with AWS, you can have an automated deployment pipeline for your next app.
